Nikon, Sony, and Canon Pitch a New Way to Fight Deepfakes

Instead of watermarking AI images, top camera makers propose embedding signatures in real photos.

As realistic AI-generated photos and videos proliferate online, tech firms and watchdog groups are racing to develop tools to identify fake content.

Watermarking computer-generated imagery is a commonly proposed solution: it adds an invisible flag, in the form of hidden metadata, that discloses that an image was created with a generative AI tool. But researchers have found that such watermarking has one major flaw: adversarial techniques can easily remove it.

Now, major camera manufacturers are proposing a different approach—the opposite, in a way: embedding watermarks in “real” photographs instead.

Nikon, Sony, and Canon recently announced a joint initiative to include digital signatures in images taken straight from their high-end mirrorless cameras. According to Nikkei Asia, the signatures will integrate key metadata like date, time, GPS location, and photographer details, cryptographically certifying the digital origin of each photo.

Nikon said it will launch this feature in its upcoming lineup of professional mirrorless cameras; Sony will issue firmware updates to insert digital signatures into its current mirrorless cameras; and Canon intends to debut cameras with built-in authentication in 2024, along with video watermarking later that year.

The goal, Nikkei reports, is to provide photojournalists, media professionals, and artists with irrefutable proof of their images’ credibility. The tamper-resistant signatures won’t disappear when images are edited and should aid efforts to combat misinformation and the fraudulent use of photos online.

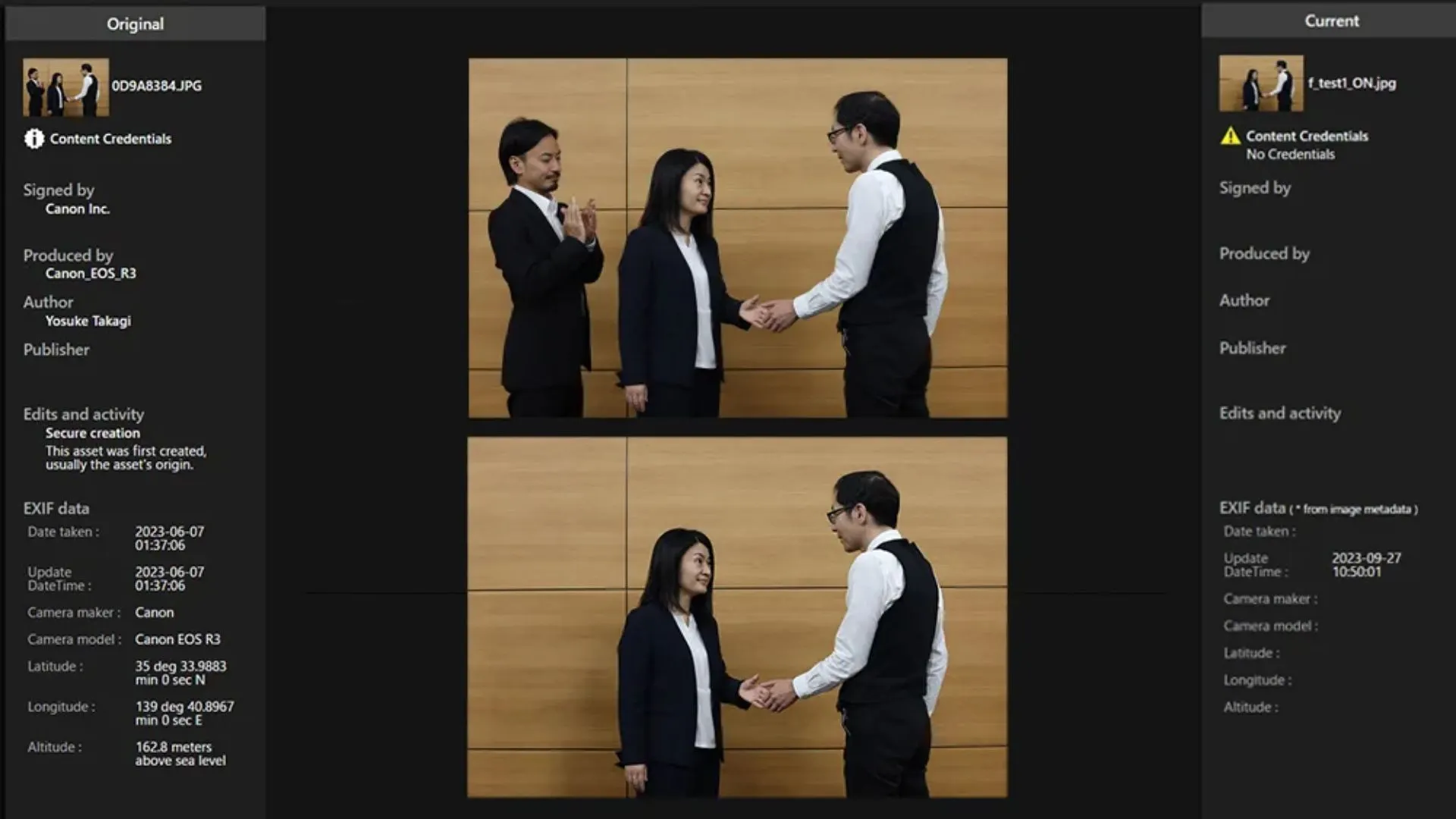

To support this, the companies collaborated on an open standard for interoperable digital signatures. Once it is in place, photos taken with compatible hardware can be checked online for free with a web-based tool called “Verify,” so people can determine whether a photo is authentic.

If an AI-generated photo is run through Verify without an authentic signature, it is tagged “No Content Credentials.”
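To make the sign-at-capture, check-later idea concrete, here is a minimal sketch. It assumes a toy textbook-RSA key built from two known primes, standing in for whatever signature scheme and hardware-protected keys the cameras actually use. The function names `sign_capture` and `verify`, the metadata fields, and every result label other than “No Content Credentials” are hypothetical; this is not the cameras’ or the Verify tool’s real implementation.

```python
import hashlib
import json

# ASSUMPTION / ILLUSTRATION ONLY: textbook RSA with a fixed toy key made from
# two Mersenne primes. Real devices would use a vetted signature scheme with
# private keys locked inside secure hardware.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537                              # public exponent (shared with checkers)
D = pow(E, -1, (P - 1) * (Q - 1))      # private exponent (stays in the camera); Python 3.8+


def _digest(pixels: bytes, metadata: dict) -> int:
    """Hash the image bytes together with canonicalized metadata, reduced mod N."""
    payload = pixels + json.dumps(metadata, sort_keys=True).encode("utf-8")
    return int.from_bytes(hashlib.sha256(payload).digest(), "big") % N


def sign_capture(pixels: bytes, metadata: dict) -> dict:
    """Hypothetical camera side: sign the pixels plus date/time/GPS/photographer metadata."""
    signature = pow(_digest(pixels, metadata), D, N)
    return {"pixels": pixels, "metadata": metadata, "signature": signature}


def verify(photo: dict) -> str:
    """Hypothetical checker side: report whether the photo's credentials hold up."""
    signature = photo.get("signature")
    if signature is None:
        # Never signed (e.g. AI-generated) or signature stripped.
        return "No Content Credentials"
    expected = _digest(photo["pixels"], photo.get("metadata", {}))
    if pow(signature, E, N) == expected:
        return "Valid credentials"
    return "Invalid credentials"
```

Because the signature covers both the pixels and the metadata, changing either one makes the check fail, while an image that never carried a signature simply reports “No Content Credentials.” This simplified sketch treats every edit as tampering; supporting legitimate edits would require recording them under a fresh signature, which it does not attempt.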

Instead of retroactively marking AI content, the plan authenticates real photos at the point of capture. But like any other watermarking system, its success will depend on widespread adoption (more hardware manufacturers incorporating the standard) and a durable implementation (code that keeps evolving to stay ahead of attackers).

As we reported, recent research indicates that anti-watermarking techniques could also strip embedded signatures, rendering current watermarking methods useless. However, such an attack only turns a watermarked image into an unwatermarked one, leaving people with fewer tools to detect whether it is artificial.

While anti-watermarking techniques could potentially remove authenticity signatures from real photos, this is less problematic than removing watermarks from AI-generated fakes. Why? If a watermark is stripped from an AI deepfake, the forged content can pass as real more easily. But if an authentication signature is stripped from a real photo, the remaining image was still captured by a camera, not produced by a generative model. Although it loses its cryptographic proof, the underlying content is still genuine.

The primary risk in that case involves attribution and rights management, not the content’s veracity. The image could be miscredited or used without proper licensing, but it doesn’t inherently mislead viewers about the reality it depicts.

OpenAI recently announced an AI-powered deepfake detector that it claims is 99% accurate on images. Yet AI detectors remain imperfect and must be constantly updated to keep pace with advances in generative technology.

The recent surge in deepfake sophistication has indeed put a spotlight on the necessity of such strategies. As we’ve seen in 2023, the need to differentiate between real and fabricated content has never been more acute. Both politicians and tech developers are scrambling to find viable solutions, so a little help from these companies is surely appreciated.